Let’s say you have a lot of crawl budget assigned to your domain and you want to use it to benchmark your own Core Web Vitals metrics against your direct competitors. The Chrome UX Report (CrUX) API is OK-ish for domain-level metrics, but you obviously want more granular data.
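For comparison, this is roughly what the origin-level CrUX query looks like. This is a minimal sketch: the endpoint and the `origin`, `formFactor` and `metrics` request fields come from the public CrUX API, while the helper names and the `YOUR_API_KEY` placeholder are hypothetical. It only builds the request, so nothing is sent until you pass it to `urlopen` yourself.

```python
import json
import urllib.request

# Public CrUX API endpoint; you need your own API key to call it.
CRUX_ENDPOINT = "https://chromeuxreport.googleapis.com/v1/records:queryRecord"


def build_crux_request(origin: str, api_key: str, form_factor: str = "PHONE") -> urllib.request.Request:
    """Build (but do not send) an origin-level CrUX queryRecord request."""
    body = {
        "origin": origin,
        "formFactor": form_factor,
        "metrics": [
            "largest_contentful_paint",
            "interaction_to_next_paint",
            "cumulative_layout_shift",
        ],
    }
    return urllib.request.Request(
        f"{CRUX_ENDPOINT}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def p75(response: dict, metric: str):
    """Pull the 75th-percentile value of one metric out of a CrUX response."""
    return response["record"]["metrics"][metric]["percentiles"]["p75"]
```

Usage: `json.load(urllib.request.urlopen(build_crux_request("https://www.nintendo.com", "YOUR_API_KEY")))` returns one aggregate record for the whole origin, which is exactly the limitation described above.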

Step 1: Crawl your competitor's website and map all of its URLs to get the full picture.
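Any crawler will do for this step. As a minimal sketch of the idea, assuming you only follow plain `<a href>` links on the same host, a breadth-first crawl with Python's standard library looks like this. The `fetch` callable is a hypothetical hook you supply (for example a thin wrapper around `urllib.request.urlopen`), which keeps the crawl logic testable offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href values of <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def same_host_links(page_url: str, html: str) -> set:
    """Absolute, fragment-free http(s) links on page_url that stay on its host."""
    parser = LinkCollector()
    parser.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in parser.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            links.add(absolute)
    return links


def crawl(start_url: str, fetch, max_pages: int = 500) -> set:
    """Breadth-first crawl. max_pages caps how many pages are fetched;
    URLs discovered but not yet fetched are still included in the result."""
    seen = {start_url}
    queue = deque([start_url])
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        fetched += 1
        for link in same_host_links(url, fetch(url)):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return seen
```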

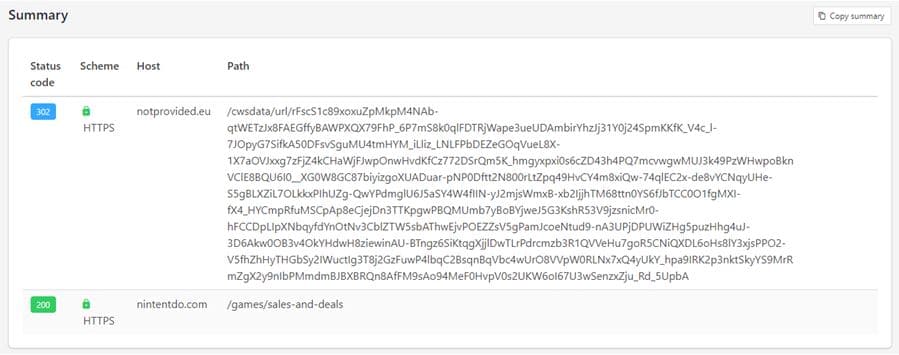

Step 2: Add a directory or subdomain on your own domain that contains all those URLs. So if the original URL is https://www.nintendo.com/games/sales-and-deals/, you create something like https://www.notprovided.eu/cws-data/nintendo/games/sales-and-deals/
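The mapping itself is just a path rewrite. A minimal sketch, assuming the folder layout from the example URLs above (`MIRROR_BASE` and the `competitor_slug` parameter are illustrative names, not anything the setup requires):

```python
from urllib.parse import urlsplit

# Assumed mirror folder, taken from the example above; swap in your own domain.
MIRROR_BASE = "https://www.notprovided.eu/cws-data/"


def mirror_url(original: str, competitor_slug: str, mirror_base: str = MIRROR_BASE) -> str:
    """Map a competitor URL into the mirror folder, keeping path and query string."""
    parts = urlsplit(original)
    mirrored = f"{mirror_base}{competitor_slug}/{parts.path.lstrip('/')}"
    if parts.query:
        mirrored += f"?{parts.query}"
    return mirrored
```

Run it over the crawl output from step 1 to get the full list of URLs you need on your own domain.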

Step 3: Add 302 redirects from these URLs to the original competitor URLs.
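What matters here is the status code: a 302 (temporary), not a 301. As a minimal sketch using Python's built-in `http.server`, assuming the `/cws-data/nintendo/` prefix and the Nintendo origin from the examples (both hypothetical constants you would change), the handler maps each mirrored path back to the competitor URL and answers with a 302:

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumed values from the example URLs; change them per competitor.
MIRROR_PREFIX = "/cws-data/nintendo/"
COMPETITOR_ORIGIN = "https://www.nintendo.com/"


def redirect_target(request_path: str):
    """Competitor URL for a mirrored path (query string included), or None."""
    if not request_path.startswith(MIRROR_PREFIX):
        return None
    return COMPETITOR_ORIGIN + request_path[len(MIRROR_PREFIX):]


class TemporaryRedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        target = redirect_target(self.path)
        if target is None:
            self.send_error(404)
            return
        # 302 Found: the temporary redirect this whole setup relies on.
        self.send_response(302)
        self.send_header("Location", target)
        self.end_headers()

    do_HEAD = do_GET


def serve(port: int = 8000) -> None:
    """Blocking: run the redirect server until interrupted."""
    HTTPServer(("", port), TemporaryRedirectHandler).serve_forever()
```

On a real site you would more likely put this in the web server config; in nginx, for example, a `rewrite` with the `redirect` flag returns a 302.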

Step 4: Add a Google Search Console (GSC) URL-prefix property for the competitor folder. In this case I would add https://www.notprovided.eu/cws-data/nintendo/

Step 5: Add an HTML sitemap containing all the URLs within the competitor's folder, so Google can actually find them organically by crawling, oldskool style. Buy some forum profile links, because yeah, we do oldskool SEO here.
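An HTML sitemap is nothing more than a page of plain links. A minimal sketch, assuming you feed it the mirrored URLs from step 2 (the function name and default title are hypothetical); `html.escape` keeps characters such as `&` in query strings valid inside attributes:

```python
from html import escape


def html_sitemap(urls, title: str = "Competitor URL index") -> str:
    """Render a bare HTML page with one crawlable <a> link per URL."""
    items = "\n".join(
        f'    <li><a href="{escape(u, quote=True)}">{escape(u)}</a></li>'
        for u in sorted(urls)
    )
    return (
        "<!DOCTYPE html>\n"
        f'<html><head><meta charset="utf-8"><title>{escape(title)}</title></head>\n'
        "<body>\n  <ul>\n"
        f"{items}\n"
        "  </ul>\n</body></html>\n"
    )
```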

Step 6: Add an XML sitemap containing all those URLs and submit it to the newly created GSC property, so you have some visibility into how slow Google can be with crawling and indexing shit.
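The XML version follows the sitemaps.org protocol: a `urlset` in the sitemap namespace with one `<url><loc>` entry per URL, and at most 50,000 URLs per file. A minimal sketch with `xml.etree.ElementTree` (the function name is hypothetical) that splits larger URL sets into several files:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit from the sitemaps.org protocol


def xml_sitemaps(urls):
    """Yield one UTF-8 sitemap document (bytes) per chunk of at most 50,000 URLs."""
    ordered = sorted(urls)
    for start in range(0, len(ordered), MAX_URLS_PER_SITEMAP):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in ordered[start:start + MAX_URLS_PER_SITEMAP]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        yield ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

Whatever path you serve the sitemap file from must not match your 302 rule, otherwise the sitemap itself gets redirected to the competitor.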

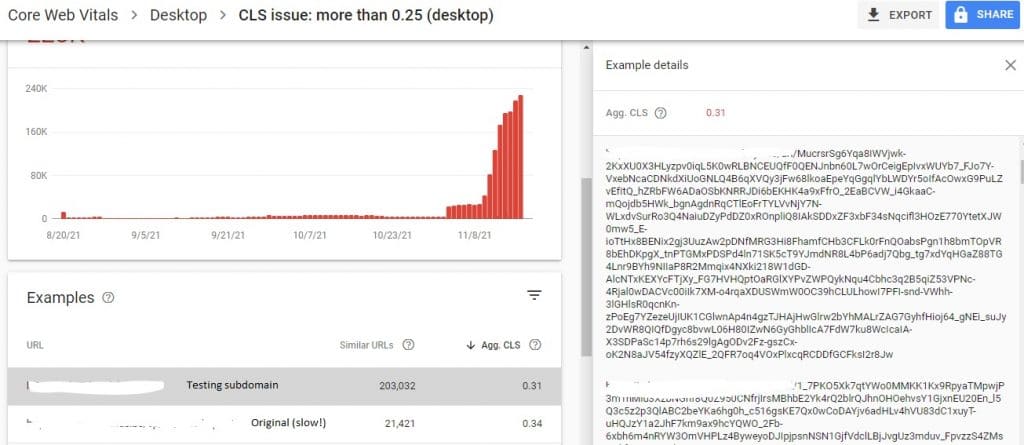

Step 7: Once you've had a burst of crawls from the ever-happy Googlebot, head over to the Core Web Vitals reports in your new GSC property.

Why does this work?

Because with temporary 302 redirects, Google attributes everything from the final destination URL to the original, redirecting URL. That works for on-page elements like the meta title and description, but also for the Core Web Vitals data reported in Google Search Console. 302 redirect hijacking is nothing new, so if you find it interesting, dig through some SEO blog archives.