I’ve been creating a lot of data-driven creative content lately, and one of the things I like to do is gather as much data as I can from public sources. In some cases, creating and running database queries costs too much time and my own PHP scraper is faster, so I just wanted to share some tools that could be helpful. A short disclaimer: use these tools at your own risk! Scraping websites can generate high numbers of pageviews and, with that, consume bandwidth of the website you are scraping.
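To keep a home-built scraper's footprint small, it helps to honour robots.txt and pause between requests. Below is a minimal Python sketch of that idea, not the PHP script from this post. It assumes all URLs belong to one site whose robots.txt lines you have already downloaded. The user agent string is a hypothetical placeholder, and the 5-second default delay is an arbitrary choice.

```python
import time
import urllib.request
import urllib.robotparser

USER_AGENT = "example-research-bot/0.1"  # hypothetical placeholder user agent


def robots_checker(robots_lines, agent=USER_AGENT):
    """Return a function that tells whether robots.txt allows `agent` to fetch a URL."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_lines)
    return lambda url: parser.can_fetch(agent, url)


def default_fetch(url, timeout=30):
    """Download a page and decode it as UTF-8, replacing undecodable bytes."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def polite_fetch_all(urls, robots_lines, delay_seconds=5.0, fetch=default_fetch):
    """Fetch each URL that robots.txt allows, pausing between requests."""
    is_allowed = robots_checker(robots_lines)
    pages = {}
    for url in urls:
        if not is_allowed(url):
            continue  # skip paths the site has disallowed
        if pages:
            time.sleep(delay_seconds)  # pause between requests, not before the first one
        pages[url] = fetch(url)
    return pages
```

The `fetch` parameter lets you swap in your own downloader. For example, you could pass one that adds proxies or caching.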

1. Scraper for Chrome

Scraper is a simple data mining extension for Google Chrome™ that is useful for online research when you need to quickly analyze data in spreadsheet form.

You can select a specific data point (a price, a rating, etc.), right-click it and choose Scrape Similar from the browser menu. You then get multiple options to export or copy your data to Excel or Google Docs. This plugin is really basic but does the job it is built for: fast and easy screen scraping.

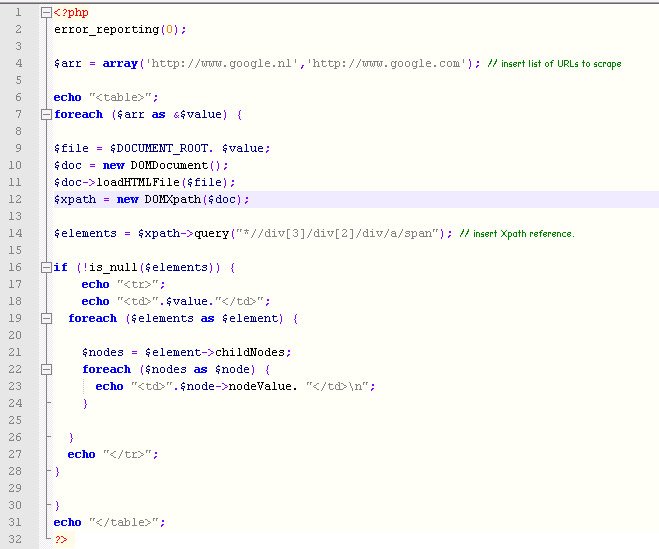

2. Simple PHP Scraper

PHP has a DOMXPath class. I’m not going to explain how it works, but with the script below you can easily scrape a list of URLs. Since it is PHP, you can use a cronjob to scrape the desired data hourly, daily or weekly. If you are not used to writing XPath expressions, use the Scraper for Chrome plugin: select the data point and you will see its XPath directly.

– Click here to download the example script.
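The linked example script is PHP. As a rough standard-library equivalent in Python, the sketch below approximates a single XPath expression of the form //tag[@attr='value'] (for example //span[@class='price']) using html.parser, because Python's standard library has no HTML XPath engine. It is not full XPath: it matches one tag name with one exact attribute value and returns the text inside each match. The names `AttributeMatcher`, `extract` and `scrape_urls` are illustrative only.

```python
from html.parser import HTMLParser
import urllib.request


class AttributeMatcher(HTMLParser):
    """Collect the text of elements matching //tag[@attr='value'] (exact match)."""

    def __init__(self, tag, attr, value):
        super().__init__()
        self.target_tag, self.target_attr, self.target_value = tag, attr, value
        self.depth = 0      # > 0 while inside a matching element
        self.current = []   # text pieces of the element being collected
        self.results = []

    def handle_starttag(self, tag, attrs):
        if self.depth:
            if tag == self.target_tag:
                self.depth += 1  # nested element with the same tag name
        elif tag == self.target_tag and dict(attrs).get(self.target_attr) == self.target_value:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if self.depth and tag == self.target_tag:
            self.depth -= 1
            if self.depth == 0:
                self.results.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def extract(html, tag, attr, value):
    """Return the text of every element matching //tag[@attr='value']."""
    matcher = AttributeMatcher(tag, attr, value)
    matcher.feed(html)
    matcher.close()
    return matcher.results


def fetch_html(url, timeout=30):
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def scrape_urls(urls, tag, attr, value, fetch=fetch_html):
    """Return {url: [matched texts]} for a list of URLs."""
    return {url: extract(fetch(url), tag, attr, value) for url in urls}
```

Run from cron like the PHP version, `scrape_urls(url_list, "span", "class", "price")` would collect every price-labelled span per page, assuming the target pages actually use that markup.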

3. Kimono Labs

Kimono has two easy ways to scrape specific URLs: just paste the URL into their website or use their bookmarklet. Once you have pointed out the data you need, you can set how often and when you want the data to be collected. The data is saved in their database. I like the fact that their learning curve is not that steep; you don’t need a PhD in engineering to use their software. The disadvantage of this tool is that you can’t upload multiple URLs at once.

4. Import.io

Import.io is a browser-based web scraping tool. By following their easy step-by-step plan, you select the data you want to scrape and the tool does the rest. It is a more sophisticated tool than Kimono. I like it because it shows a clear overview of all the scrapers you have active, and you can scrape multiple URLs at once.

5. Outwit Hub

I will start with the two biggest differences compared to the previous tool: it is a software package you install on your PC or laptop, and using its full potential will cost you 75 USD. The free version can only scrape 100 rows of data. What I do like is the number of preprogrammed scraping options, which make it easy to start and learn about web scraping.

6. ScraperWiki

This tool is really for people who want to scrape on a massive scale. You can code your own scrapers (in PHP, Ruby and Python), and pricing is really cheap considering what you get: 29 USD/month for 100 datasets. You are completely free to use any libraries and timers. And if your programming skills are not good enough, they can help you out (as a paid service, though). Compared to the other tools, this is the most advanced one, and it still offers the basics of web scraping.

7. Fminer.com

This tool made it possible to finally scrape all the data inside Google Webmaster Tools since it can deal with JavaScript and AJAX interfaces. Read my extensive review on this page: Scraping Webmaster Tools with FMiner!

But in the end, building your own scrapers for each project will always be more effective than using predefined ones. Am I missing any tools in this roundup?

It’s like you read my mind! You appear to know a lot about this, like you wrote the book on it or something.

I think you could do with some pics to drive the message home a bit, but other than that, this is a magnificent blog. An excellent read.

I’ll definitely be back.

Thanks for the mention. It’s always nice to see it being talked about ‘in the wild’.

Say Hi in person if you are around London sometime.

You could also try using GrabzIt’s on-line screen scraper (http://grabz.it/scraper/) tool, which is easy to use and free.

Thanks for the mention!

Hi,

I was looking for a tool that could create a product feed directly from my webpage. Has anyone heard of such a tool? The only one I have found is feedink.com. I wonder if there are other tools like that? Can somebody recommend something?

Best regards,

George

Hi George!

What kind of feed do you want, and what do you want to use it for? Kimono Labs supports APIs, and with that, feeds; maybe you could look into that!

Hi,

I want to find the best sellers on Amazon beyond the top 100 that they publish. Every listing has a Best Sellers Rank (BSR), which shows its position within a category (there are many categories).

I have a great search term for Google that finds all the listings with a BSR between 100 and 200 on Amazon, and I have scraped the results into Excel. So now I am looking for a scraper that will go through my Excel list of Amazon URLs and bring back the BSR# and category.

Do you know which tool is best for this?

Thanks in advance for your help.

Try import.io or Kimonolabs. Once you have set up a system to get the data from one URL, you can tell the tools to scrape all the URLs in your Excel list.
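If you would rather script this step yourself, the sketch below shows the batch part in Python. It reads the URL column from a CSV export of the Excel sheet, runs an extractor on each URL and writes the results back to CSV. The extractor is a hypothetical stand-in: it should return a dict such as {"bsr": ..., "category": ...}. The column name `url` is also an assumption, and nothing here knows Amazon's actual page markup.

```python
import csv
import io


def scrape_url_list(csv_text, extract, url_column="url"):
    """Call extract(url) -> dict for each URL in `url_column` and collect the rows."""
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        url = (record.get(url_column) or "").strip()
        if not url:
            continue  # skip empty cells
        row = {"url": url}
        row.update(extract(url))  # hypothetical extractor supplies the other columns
        rows.append(row)
    return rows


def rows_to_csv(rows, fieldnames):
    """Serialise rows to CSV text that Excel can open."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()
```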

At your recommendation I gave import.io a try, and I must say your recommendation was excellent. Thanks for the tip. The Perl script I had run was instantly detected and shut down, and the Chrome plug-in I tried had a moderately high learning curve.

Import.io was a snap.

Thanks again and much respect,

-Jon

Thanks for sharing your knowledge.

I need to download stock prices from ft.com every week.

Which web scraping tool would be best for that?

Thanks

iil888

I would use Kimonolabs for a job like that!

Great list and thanks for sharing the information with all of us.

I am starting a webpage that has an events section. Many of the local events are on government sites (i.e. the stadium, concert hall and parks), OR the people running the event WANT it to be shared/copied/scraped as much as possible.

Can you recommend a combination of website platform and web scraping tool that work well together?

Thanks!

I would use Import.io because of the integration clients they have available (https://import.io/features), so you can integrate data live.

Will check them out for sure! Thanks for the quick reply.

Hi!

I just want to throw our APFy.me into the mix, if you don’t mind. It allows you to turn any URL into an XML/JSON API with markup validation (in case anything important changes in the source HTML).

As a consumer you get a nicely formatted API to work against and all the parsing, formatting and validation logic is configured in APFy.me.

Is there a good tool to screen scrape authenticated pages?

Most desktop based scrapers can handle login forms.

Yes, import.io will do that.

Well, I tried two different .aspx sites that require authentication. One didn’t get through the login; the second did but did not find any data... Nothing beats building your own. My experience is that you can “talk” to websites in several ways; you only need to find out which “language” your target understands.

Hi,

Wondering if you could recommend an alternative to ScraperWiki for scraping twitter followers since they don’t seem to be able to do it anymore?!

It could be a problem with the limits Twitter puts on data transfers. You could try http://www.giuseppepastore.com/en/get-twitter-followers/

Hello,

I am interested in a LinkedIn search export tool that would export my LinkedIn search into Excel.

Any software that you would recommend?

I tried building my own scraper but was unsuccessful (it only pulls in my profile data and not the search results).

Can you specify your search? It shouldn’t be too difficult to scrape the results; if you just want to export them, you can simply use the Scraper Chrome plugin.

Hi,

Thanks a lot for your post, I found it very helpful! May I ask: if I want to regularly save some tables on a website (for example, save a copy of the table contents every 10 minutes) to my hard disk or somewhere on their server, so that I can access all these tables later whenever I want (no matter what format they were saved in), which tool do you think is best for this?

Thanks a lot for your help in advance!

I would write a PHP script that saves the plain HTML for that, which you can run every 10 minutes via a cronjob.
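Here is a Python sketch of the same idea (the reply suggests PHP). It fetches the page and stores the raw HTML under a timestamped filename, so earlier snapshots are never overwritten. The folder, the `table-...html` filename pattern and the crontab line are illustrative assumptions.

```python
import datetime
import pathlib
import urllib.request


def fetch_html(url, timeout=30):
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def save_snapshot(url, folder, now=None, fetch=fetch_html):
    """Save the page's raw HTML as folder/table-YYYYmmdd-HHMMSS.html and return the path."""
    now = now or datetime.datetime.now()
    path = pathlib.Path(folder) / now.strftime("table-%Y%m%d-%H%M%S.html")
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(fetch(url), encoding="utf-8")
    return path

# Example crontab entry (hypothetical paths) running a wrapper script every 10 minutes:
# */10 * * * * /usr/bin/python3 /home/me/snapshot.py
```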

I have a large number of historical HTML files (181,000+) of various sizes (1 MB to 25 MB each) that I need to scrape for some information. The files were submitted by many different sources, so their structure is not consistent. They also appear to be merged files: some contain standard HTML with one element per line, while others have entire files embedded inside them, with lines 52,000 characters wide. Is there a way to take a file and simply write out, as standard HTML, what is displayed on screen? Then I could scrape the resulting file or process it with other tools. I appreciate any feedback at all. I have all the HTML files on a local hard drive.
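One possible approach, sketched in Python, assumes that "what is written to the screen" means the visible text. The code strips tags, scripts and styles, and emits one line per block element. Python's HTML parser does not care about line length, so 52,000-character lines are not a problem. The set of block tags is a simplification, and the sketch neither executes JavaScript nor applies CSS.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that a browser would show, one line per block element."""

    SKIP_TAGS = {"script", "style", "title"}  # content not shown in the page body
    BLOCK_TAGS = {"p", "div", "br", "tr", "li", "table", "h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self.skip_depth += 1
        elif tag in self.BLOCK_TAGS:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1
        elif tag in self.BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(data)


def visible_text(html):
    """Return the visible text, whitespace-collapsed, without empty lines."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    lines = (" ".join(line.split()) for line in "".join(parser.parts).splitlines())
    return "\n".join(line for line in lines if line)
```

For the 181,000 files, you would loop over them one at a time and write each result to a `.txt` file next to the original, reading each file with `errors="replace"` in case of mixed encodings.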

json-csv.com is a good tool for extracting the data from JSON feeds.
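If you prefer to do the conversion locally, here is a small Python sketch. It assumes the feed is a JSON array of objects. Nested objects become dotted column names (e.g. `price.amount`), and lists are written in their Python string form rather than being expanded.

```python
import csv
import io
import json


def flatten(obj, prefix=""):
    """Flatten nested dicts into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, name + "."))
        else:
            flat[name] = value
    return flat


def json_feed_to_csv(json_text):
    """Convert a JSON array of objects to CSV text; missing fields become empty cells."""
    records = [flatten(record) for record in json.loads(json_text)]
    fieldnames = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)  # keep first-seen column order
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return out.getvalue()
```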

I have used Mozenda.com for years. It works well. Would you mind taking a look at that solution Jan-Willem? It has been written and talked about in many SEO conferences and blog posts. http://mitchfournier.com/2011/05/17/distilled-pro-seo-seminar-day-one-recap/

Hi Jon,

I actually tried the (your?) software and was not happy with it. That could be because some of the jobs I had to take care of were better suited to other software or scripts, but I didn’t get the best results with Mozenda.

Yes Jan, I should have been more transparent. I have been a customer of Mozenda since 2008, using the software for clients. I found the software so simple and powerful that I called them up one day asking if I could invest. I am now an investor in the company, so my views may be a bit one-sided. I am sorry you had a bad experience with the software, but if there is any way I can help you walk through it, I will. Mozenda has over 25% of the Fortune 500 companies as customers, so please consider trying Mozenda again when you write another web scraping review.

Hi Jon,

Your “Mozenda” thing is outdated... Start by removing that “start free trial” button; it scared me! You are also offering little scraping capacity compared to your competitors, e.g. import.io offers unlimited pages on its free plan, and kimonolabs.com 20M requests monthly! I am a software engineer trying out scraping options for my upcoming app. To be honest, I can’t ask for more than what import.io and kimonolabs.com are promising (I need just 10 million raw records). Thanks Jan for sharing this, you are awesome.

I think you are correct Pascal if you are looking for a free solution, Mozenda is not the product for you. If you are looking for great customer support and clean reliable data then Mozenda is a better fit. I have tried the tools you mention and you do get what you pay for.

Mozenda is expensive and full of bugs. I have crashed it numerous times with simple edits, and they claim they know nothing. If you’re an investor, I would look into the issues with the desktop version, which is incredibly slow and chock-full of bugs.

Hi,

I have been researching ways to monitor and analyze data from 15 URLs for hourly information on NFL players (400) on Windows using Chrome. I spent some time going through Python with Scrapy but have run into a few roadblocks on the learning curve. Recently I was looking at YouTube videos on Excel VBA web scraping. Any suggestions? My URLs are not using formatted data, so each requires a “view source” or “inspect element” to determine the string patterns.

Thanks,

Rob

In my previous comment, the text between the quotes in “are not “” formatted” was removed when I described the URLs I am trying to scrape.

At import.io we announced a $3M seed+ round of funding and a new interface for building ultra-quick scrapes/APIs. If it’s quick extractions you want, you can now often build datasets in under 40 seconds, or take your time and dip into the advanced tools like XPath, regex and JavaScript toggling.

Hi,

My question is similar to Anamika’s.

I need to export the LinkedIn searches for various companies to an Excel sheet. For this, I need a scraper that automatically scrapes the results into Excel once a search is done.

I’m also unaware of the benefits of Google web scraper plugin.

What to use?

Hi! I am looking for a scraper that will allow me to upload unique file numbers and download the results into an Excel file. For example, I would upload an address and then pull specific information about the property from the web page. I can find some very expensive solutions but am looking for a simple and inexpensive one.

Thanks!!

Hi Eugene,

Try Excel: install the SeoTools for Excel plugin by Niels Bosma and use its different scraping functions: http://nielsbosma.se/projects/seotools/

Hi,

Is there a way I can get the product URL, product name, product image URL and product price from any e-commerce website into an Excel file?

All the products present on the ecommerce website?

Hi Deepak,

First use a tool like Xenu or Screaming Frog to crawl the website and filter the results down to the product pages. Next you can use SeoTools for Excel to scrape all the data you want.

Can we automate this process? I mean, can we crawl the entire site, pick up only the relevant data (not all of it: only product URL, product price and product image) and keep updating the database?

Also, can you share some insight into how product aggregator websites get product details?

What would be the best scraping tool/API to get data on travel spots, pilgrimage sites, hotel data like contact info, etc.?

I am a newbie to this. I want to import some stock information into Numbers from Yahoo Finance. It is not on their download parameter list but on another page, so I guess I need scraping to get the next ex-dividend date and put it into my spreadsheet. Is there a ‘simple’ way to do this? I hope this is an OK question for this forum, as everyone here is more advanced than I am.

You should take a look at http://blog.import.io/post/create-an-app-in-10-minutes-or-less

Hi. I have used Mozenda before, and after a lot of struggling it did work. Now I have tried Import.io and I like it a lot. Is there a way to make it work on multiple queries from an Excel file, like Mozenda can? Or do you have any other free scrapers with this feature to recommend?

Best regards!

Hi Andreas,

Have you tried the scraper function within Import.io? It can do the job based on predefined lists, for example.

Hi Admin,

I have a site to scrape, but it throws up a CAPTCHA after 25-35 consecutive requests, which makes it hard to automate. I saw some CAPTCHA decoder apps available on the net, but I haven’t tried them.

Do you know a web scraping tool that has a built-in CAPTCHA decoder?

You could try FMiner, which has a built-in CAPTCHA solver 🙂

Hi,

I am new at this and want to get info from LinkedIn search results without opening them one by one. The parameters I am using are: full name, LinkedIn URL, current position, company.

Any easy way to do it for a layman who does not know programming?

At the moment I am using the Chrome Scraper plugin to select names (page by page), which gives me the full name and LinkedIn URL.

Best,

Zoran

For that, setting up a model in Import.io will do the job. You can actually use their scraper functions to do the work after you have trained the system to scrape the data per page.

I tried Import.io, but it will not work with LinkedIn. As soon as you switch the ON button, LinkedIn logs out, so I did not get the chance to see how it can be done.

I would like to scrape various sports websites for basic sports information (scores, stats, etc.) and have the results in RSS/XML format.

I am looking for a tool that is simple and cost-effective to use for this. What would you suggest?

I think Import.io is the quickest tool you can use for that, since you will need to build a scraper for every different website you want to use. In just 10 seconds I had the data from BBC available: https://magic.import.io/?site=http:%2F%2Fwww.bbc.com%2Fsport%2Ffootball%2Fresults
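Whatever tool does the scraping, turning the results into RSS is the easy part. Below is a minimal Python sketch using the standard library's ElementTree. The item fields (`title`, `link`) and the sample match data are hypothetical, and only the required RSS 2.0 channel elements (title, link, description) are written.

```python
import xml.etree.ElementTree as ET


def build_rss(title, link, items):
    """Build an RSS 2.0 document (as a string) from scraped items with 'title' and 'link'."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = f"Scraped results: {title}"
    for item in items:
        entry = ET.SubElement(channel, "item")
        ET.SubElement(entry, "title").text = item["title"]
        ET.SubElement(entry, "link").text = item["link"]
    return ET.tostring(rss, encoding="unicode")
```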

Is there a scraper that can collect links from a website and filter them by some parameter (e.g. price)? I want to collect advertisements, but the price must be less than a set value (e.g. $100).

You could build one yourself in PHP in combination with the Import.io APIs. You can train two models in Import.io and filter the results via the API: one extractor finds all the links to the advertisements along with their prices, and the second extracts the specific data per advertisement.

Thanks for your answer! PHP is a powerful thing, but I would prefer to avoid coding. Maybe there is an app that can load data extracted from a website (CSV or JSON), analyse it, apply filters and so on?
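For the filtering step on exported data, here is a short Python sketch. It assumes each advertisement is a dict with a free-text `price` field, as you would get from a CSV or JSON export. It treats commas as thousands separators and drops ads whose price cannot be parsed.

```python
import re
from decimal import Decimal

PRICE_RE = re.compile(r"\d+(?:\.\d+)?")  # first number, dot as decimal separator


def parse_price(text):
    """Return the first number in a price string such as '$85' or '1,250.00 USD', or None."""
    match = PRICE_RE.search(text.replace(",", ""))
    return Decimal(match.group()) if match else None


def cheaper_than(ads, limit):
    """Keep only ads whose parsed price is below `limit`."""
    limit = Decimal(str(limit))
    result = []
    for ad in ads:
        price = parse_price(ad.get("price", ""))
        if price is not None and price < limit:
            result.append(ad)
    return result
```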

Hi Jan,

thanks for sharing. I must say that I wasn’t aware of some of the tools you mentioned. However, I would question why the Simple PHP Scraper is on the list, as it is not an actual tool and requires coding. If we want to include web scraping libraries, you should definitely add Scrapy and Selenium. You can find more here: http://blog.tkacprow.pl/web-scraping-tutorial/

In my opinion, no web scraping tool out there is good enough for scraping any kind of content. I encourage every web scraper (even beginners) to learn at least how to make simple XML HTTP requests in Python or VBA. The learning curve is almost as flat as for any of the other tools you mentioned.

I do agree with you on building custom-made software, but many people are not capable of programming. Hence the success of tools like Import.io. Personally, I combine the scalability of Import.io with custom-made PHP scripts that process and handle the data via their APIs.

Hi Jan,

Great article.

What do you think about this one: http://www.uipath.com/automate/web-scraping-software ?

Hi Mihai,

I tested it three years ago, but maybe I should test it again. Thanks for pointing it out!

Hi Jan,

It’s a very nice article, which I found while searching for a solution to my problem.

I’m building a website for my local table tennis club and am looking for an efficient way to scrape the web, or at least a list of defined URLs, for interesting news on table tennis. As I am not a very skilled programmer (just a beginner), I am looking for the best way to achieve this.

What would you recommend?

Thanks in advance for a short reply.

So you want to build a list of interesting table tennis news posts? The first thing you should do is build a unique scraper for every website you want news from. Import.io can combine those unique scrapers into one feed of data via their API connections.
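If you end up combining the per-site scrapers yourself instead of through Import.io, the merge step is small. Here is a Python sketch, assuming each scraper returns items with hypothetical `url`, `title` and `published` fields (`published` being a date). Duplicates with the same URL are dropped, keeping the first one seen, and the newest posts come first.

```python
def merge_feeds(*sources):
    """Merge item lists from several scrapers, drop duplicate URLs, newest first."""
    seen = set()
    merged = []
    for source in sources:
        for item in source:
            if item["url"] in seen:
                continue  # same article scraped twice
            seen.add(item["url"])
            merged.append(item)
    merged.sort(key=lambda item: item["published"], reverse=True)
    return merged
```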

Hi Jan-Willem

A bit of shameless self-promotion here – but would love your feedback on our recently launched tool at cloudscrape.com. It’s 100% in-browser point-and-click with the added power of being able to navigate and manipulate the pages being scraped in any way you might need to get the data you want. Hope to hear from you – thanks!

Thanks Henrik, always curious to try new tools, so I’ll definitely have a look!

I’m looking for a tool that will scrape password-protected pages. In other words, I have an account that gives me access to data in tabular form (with multiple pages of results). I want to scrape that data, and the data from the links in that table, BUT I don’t want my username and password saved. Can you please recommend a tool that would handle this? I tried import.io, but I got the impression that my username/password would then be available to the general public...

Thanks!

Import.io would be a safe option. If you really don’t trust the companies, use a desktop-based scraper software package like UiPath or FMiner.

Hi Jan,

https://Scrape.it is an extension that you install in your Chrome browser. It does not require you to input passwords and does not store them. It can automatically scrape any webpage, whether you are logged in or logged out.

Unlike Import.io or Kimono, Scrape.it is a browser which is able to render any web page you come across.

On top of that, there is a great level of service to help you with your specific web scraping needs. Just send an email and you will get a reply from me very quickly.

Hi Jan! I have to collect the number of followers of a brand on different social networks each week. I tried import.io on Facebook but it does not create the API (I guess for security reasons?). Do you have any suggestions on how to do that? My problem is that web scraping usually targets multiple data entries on one page, while I need a single piece of info from each of several webpages... Thanks!

In most cases I create an API and use secondary scripting, for example PHP to go through a list of specific websites. Not sure but I think you can publicly crawl Facebook brand pages for the overall data points.

Thank you for such an informative piece. Do any of the tools you mentioned allow scraping WITH file downloads (e.g. a web page links to an Excel file: the tool scrapes the data on the page and also downloads the Excel file it points to)?

Thank you Jan-Willem.

Jimmy

jimmy@admango.com

Not that I am aware of. You could build an easy PHP-based solution, though. For Python, there are a few solutions floating around the internet: http://stackoverflow.com/questions/13038012/python-web-scraping-download-a-file-and-store-all-data-in-xml
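For the Python route, here is a minimal standard-library sketch. It finds links on a page that point to spreadsheet files (the extension list is an assumption), resolves them against the page URL, and downloads each one into a folder. It is not taken from the Stack Overflow answer linked above.

```python
import pathlib
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class FileLinkFinder(HTMLParser):
    """Collect absolute URLs of <a href> links whose path ends in one of `extensions`."""

    def __init__(self, base_url, extensions):
        super().__init__()
        self.base_url = base_url
        self.extensions = tuple(extensions)
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        absolute = urljoin(self.base_url, href)  # resolve relative links
        if urlsplit(absolute).path.lower().endswith(self.extensions):
            self.links.append(absolute)


def find_file_links(html, base_url, extensions=(".xls", ".xlsx", ".csv")):
    finder = FileLinkFinder(base_url, extensions)
    finder.feed(html)
    finder.close()
    return finder.links


def download(url, folder, timeout=30):
    """Save the file at `url` into `folder`, named after the last path segment."""
    name = pathlib.PurePosixPath(urlsplit(url).path).name
    target = pathlib.Path(folder) / name
    with urllib.request.urlopen(url, timeout=timeout) as response:
        target.write_bytes(response.read())
    return target
```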

Hi Jan, thank you for the list. I provide professional data scraping services using Java or Unix scripts, and to date I have been using Jsoup. But I am looking for a tool that can provide me an API to create and distribute scraping utilities, because Jsoup has a limitation: it cannot parse AJAX/dynamic data or data behind authentication. Do you suggest any API provider that allows this kind of development?

Yes, try Import.io.

Hi Jan! Thanks for this very insightful post.

Do you know any free tool where I can scrape using the same functionality OutWit offers with its “Marker Before” and “Marker After” elements?

Thanks!

Nico
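A free way to get a similar effect is a few lines of code. This Python sketch imitates the idea behind "Marker Before" / "Marker After"; it is not OutWit's implementation. It returns every piece of text that sits between a start marker and the next end marker. The sample HTML in the usage is made up.

```python
def between_markers(text, before, after):
    """Return every substring found between a `before` marker and the next `after` marker."""
    results = []
    start = 0
    while True:
        i = text.find(before, start)
        if i == -1:
            break
        i += len(before)
        j = text.find(after, i)
        if j == -1:
            break  # opening marker without a closing one
        results.append(text[i:j].strip())
        start = j + len(after)
    return results
```

For example, `between_markers(page_source, "Price:</td><td>", "</td>")` would return every value in the cell that follows a "Price:" label.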

Hi Jan,

Thanks for these insights.

I am working on a project which will need a tool that can scrape ticket vendors, performer websites and event aggregators.

We require general data (i.e. event name, image, date, location, description, ticket price, ticket shop URL).

Do you have any expert recommendations for these specific data requests?

Many thanks in advance.

Jon

Hi Jon,

This completely depends on the source websites. Per website you probably need to develop a specific scraper.

Wow, I am blown away by your page, LOTS of REAL conversation and data.

Hello,

How are you? My name is Jon and I am trying to figure out a command-line entry for Goosh that takes a search term as input and outputs to CSV.

The most info I have found thus far is: http://stackoverflow.com/questions/22033394/how-to-search-goosh-org-from-the-command-line

Any thoughts? My goal is to be able to input a list (of items to search) and output the URLs found.

My first target is Google Maps: I want to get the data fields along with the longitude and latitude, for example:

https://www.google.com/maps/place/Hop+Aboard+Trolley/@34.1559669,-118.7473257,17z/data=!3m1!4b1!4m2!3m1!1s0x80e826b788d5b3a9:0x2c826704fdaeb104

The longitude and latitude are in the address bar, and the additional fields are displayed below:

1050 W Potrero Road, Westlake Village, CA 91361

hatrolley.com

(818) 351-6242

Open now: 7:00 AM – 1:00 AM

Suggest an edit

I am contacting you because I know that if ANYONE knows, you would. Could you please offer any direction on where I can learn about this?

Please.

Thank you.
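For the coordinates part, here is a small Python sketch that reads them from the `@latitude,longitude,zoomz` segment seen in the example URL above. Note the order: in that URL the latitude (34.1559669) comes before the longitude (-118.7473257). This URL layout is not a documented interface and may change. The other fields (address, website, phone, opening hours) are not part of the URL, so they would have to come from the page itself.

```python
import re

# Matches the "@latitude,longitude,zoomz" segment seen in Google Maps place URLs.
COORDS_RE = re.compile(r"@(-?\d+(?:\.\d+)?),(-?\d+(?:\.\d+)?)(?:,(\d+(?:\.\d+)?)z)?")


def coords_from_maps_url(url):
    """Return (latitude, longitude, zoom) from a Maps URL, or None if absent."""
    match = COORDS_RE.search(url)
    if match is None:
        return None
    latitude, longitude = float(match.group(1)), float(match.group(2))
    zoom = float(match.group(3)) if match.group(3) else None
    return latitude, longitude, zoom
```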

Hi there,

thanks for the tips it is a very good read.

I’ve been using import.io for a week. It’s really good for extracting data from a website without authentication, but unfortunately it doesn’t do authenticated pages anymore (apparently it used to, but they gave up on it).

Can someone recommend a good scraper that handles authentication?

Thanks!

Tibo

Hi Tibo,

I’m not aware of any scalable free tools that are capable of the same things the old Authenticated APIs of Import.io did. Have you tried Kimono? How are your programming skills? Try Node.js or Python -> http://shrew.io/post/89337937049/scraping-websites-with-nodejs-authentication

Wow, I am new to the world of Website Scraping and found this article very informative.

I have a question, though... I have started to use a Chrome extension called webscraper.io, which seems to be pretty good for basic information. However, the data I need is a further level down (not sure of the correct terminology), and it does not appear to cope with this. Also, it sometimes finishes scraping with no results, even though it worked a couple of minutes earlier (I made no change to the code and it then started working normally again).

Anyone have any better solution?

Hi,

It really depends on the amount and sort of data you want to scrape. Could you share an example?

Jan-Willem

Hi admin

Thanks for the reply.

My aim is to scrape a community website which acts as a type of “hub” for other websites.

The main site is called Enjin, and the page I want to start at is located at: http://www.enjin.com/recruitment

This page lists multiple subpages, each of which has details about external websites.

There are links to the external websites which holds membership details.

To paint a picture, this is what I would like the scraper to do:

1. Go to http://www.enjin.com/recruitment

2. Go to each game category within the above link

3. Go to each gaming community and follow their link to their website.

4. Navigate to the members page on the gaming community website

5. Go to each members page and copy basic profile information

6. Repeat process for each game community and for each game category.

The end result would hopefully show these columns:

Username on external community website

External Community name

External Community website

Details of user profile

Game Category

I realise that the results will show more than the above, when using pagination, but essentially the above columns are the ones required. Anything more is just a bonus.

I have used the Chrome extension “webscraper.io” to build a scraper, which appears to start working, but then the Enjin website (main webpage) displays a page error.

Maybe they have an anti-web-scraping measure on their website?

Any help on this is greatly appreciated.

Kind regards

Frost

Hi Frost,

I would use Import.io. First build an API via a connector (which can mimic user behaviour like clicks) for the overview pages to scrape all the community links. Next you can use an extractor, with the source URLs from the previously created API, to crawl the individual member pages. They all have a similar structure if they use the enjin.com platform. The problem is clans that use different platforms, like lostheaven.com.pl for example. You’ll need to build an extractor for every unique system.

Hi again

Thanks for the advice!

I tried the Import.io application however it did not seem to work.

I assumed the Enjin site recognised it as ‘non-human’ activity.

I have decided to carry on with the Chrome extension “webscraper.io”, as I am more comfortable navigating it.

The next issue is the CAPTCHA security that Enjin has.

I think it recognises if a request is not human.

Any way around this?

I tried increasing the delay (in milliseconds) between requests and page refreshes, but have run out of ideas.

Any way around this CAPTCHA screen?

Again, many thanks!!

Frost

hi

this is ridiculous.

The best way on websites that are not JavaScript-heavy is by far (PHP) cURL with XPath. For JS-protected websites like online banking, I advise using Selenium RC (Server) with headless PhantomJS. This is how it is done; forget the desktop tools, they are overpriced, limited and, for developers, just ridiculous. My 2 cents; don’t know why Google put me on this page...

The average marketeer does not have developer skills. That does not mean the solution you propose is a good one. Any references you can share?

I am not a developer and my skills with coding are VERY limited.

I think this page offers helpful resources for the majority of the people that come by.

It would be a total waste of time to learn all about coding when all I want is a simple data extractor.

Learning all the coding for such a small task would be like someone wanting to learn to change a tire, but being told to learn how to change wheel bearings first.

In my opinion, your “2 cents” is of greatly depreciated value here.

In my opinion, what you want is not just an average scraping task. Scraping movie reviews from IMDb, where every page has the same setup, is an easy task. You’re dealing with an endless array of different websites you want data from.

Hi, thanks for this article. I am a beginner and basically just want to get an email address for each website in a list. Can you recommend a good tool for that? Your response is highly appreciated.

Are they all different websites? Maybe you can get something out of https://www.import.io/post/get-more-leads-with-web-data/
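If the email addresses appear in the page source, a regular expression gets you most of the way. Below is a rough Python sketch. The pattern is a pragmatic approximation, not a full RFC 5322 validator, and it can produce false positives such as image names like logo@2x.png.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_emails(html):
    """Return unique email-like strings from page source, in order of appearance."""
    found = []
    for email in EMAIL_RE.findall(html):
        if email not in found:
            found.append(email)
    return found
```

Combined with any fetch loop over your list of websites, you could run this on each homepage and, if nothing turns up, on a contact page.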

Hi Jan-Willem,

I really liked this post, thanks. Our data scraping tool detects dialogue (both direct and indirect) within bodies of text, extracts each quote and attributes it to the correct speaker. This is a great tool for an analysis of media coverage – is it something you’d be interested in using? Or could you recommend someone who might be interested in trialing the tool?

Hi Kirsty, I normally get a lot of spammy comments mentioning crappy tools but this one looks interesting. I’ll have a try and see what it can do for me! Thanks

Hi Jan-Willem,

I have a list of website URLs in an Excel file saved in .csv format. I want to extract the phone numbers from all the URLs. I tried Octoparse for this, following this article:

http://www.octoparse.com/blog/how-to-extract-phone-number/.

But I’m told Octoparse can only deal with webpages that share a similar layout. Can you tell me which programs can do this?

Thanks.

I’m not aware of software that works based on phone number pattern recognition.
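Phone numbers are hard to match in general because formats differ per country, but for a single known format a pattern is manageable. This Python sketch only handles North American numbers written like (818) 351-6242, 818-351-6242 or 818.351.6242, and normalises them to one form. Other formats would need their own patterns.

```python
import re

# North American format only: optional parentheses around the area code,
# then space, dot or dash separators.
US_PHONE_RE = re.compile(r"\(?\b(\d{3})\)?[\s.-]?(\d{3})[\s.-](\d{4})\b")


def find_us_phone_numbers(text):
    """Return unique numbers normalised to '(AAA) EEE-LLLL', in order of appearance."""
    found = []
    for area, exchange, line in US_PHONE_RE.findall(text):
        number = f"({area}) {exchange}-{line}"
        if number not in found:
            found.append(number)
    return found
```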

Maybe you want to give extracty.com a try? It is also a good web scraping tool!

I just tried it for 8 websites, none of them was working. Even the examples mentioned at their homepage don’t work.

Kimono is no longer available. It got bought out and closed down, leaving everyone who relied on it screwed.

There are some new web scrapers, like Octoparse.

It’s relatively trivial to code a scraper so I would never use an automated scraping tool.

Thank you for this great information. I’m a marketeer with zilch coding skills. I’m interested in running sentiment analysis on very sticky community forums with levels of conversation going back several years. Each conversation thread is several pages deep. Would you please recommend a tool to do this?

What about Hurry Scrap? You should also check this one out!

https://chrome.google.com/webstore/detail/hurry-scrap/akkkdfbnncpligajlllmbhkejnligpnb

Hi Jan-Willem,

Great article, loved reading it (and the comments).

I’m the founder of easy-scraper.io a new startup in the field of web scraping. We offer a devtool which currently is the only web scraper in the world that handles Javascript in the background without any user effort. We also provide an API which is very easy to use. For example to get all the links from this page you could append the “element” parameter in conjunction with the endpoint:

https://api.easy-scraper.io/?url=https://www.notprovided.eu/7-tools-web-scraping-use-data-journalism-creating-insightful-content/&element=a,a%5Bhref%5D

To scrape it into an Excel file you simply provide a parameter “output” with the value xlsx:

https://api.easy-scraper.io/?url=https://www.notprovided.eu/7-tools-web-scraping-use-data-journalism-creating-insightful-content/&element=a,a%5Bhref%5D&output=xlsx

Would love to hear your thoughts on our new startup and whether we can stand up to your tested web scrapers.

Kind regards,

Youssef

Hi Jan-Willem.

My name is Jacob Laurvigen, from dexi.io. We developed and launched a web scraping and data refinery tool (pipes). As we (obviously) believe that we have created the best web scraping/data extraction tool available, it would be great if you could evaluate our tool and add us to the list: https://dexi.io

Let me know if there is anything I can do?

Kind regards, Jacob Laurvigen

Hi, I found your article while researching software. I am looking for a crawler that can find the LinkedIn accounts of companies. I have the website URLs. I need software that, when I enter these URLs, generates the LinkedIn accounts of these companies. Can you suggest software that does exactly this? Thank you.

Hi,

I’ve been playing around with scraping eBay and Amazon for listing rankings. ParseHub has been great for Amazon, but on eBay the listings returned were completely different because ParseHub is located in Canada. I have the same problem with the Grepsr Chrome extension.

Are there any scrapers that you can lock the location on or are UK based?

Cheers

Hi Peter,

A tool like https://www.webharvy.com/tour8.html has an option to add proxies. So you should first find some reliable local proxies and then use a tool that can handle them.

Hi Jan-Willem,

I’m Mikhail Sisin from the Diggernaut cloud web scraping service (http://www.diggernaut.com/).

First of all, I want to thank you for your efforts to make the web scraping scene brighter for newcomers. I was wondering whether you are planning to release a new version? Four years have passed and a lot has changed: new and promising players have appeared, and some services and tools no longer exist. It would be really nice if you could find time for it and give the community an updated, comprehensive review.

Thanks again.

Aren’t proxies more or less mandatory for web scraping? In my experience, you get blocked pretty quickly, and you need proxies to get around these blocks. Or does it depend on the scale of the scraping you do? I have used Oxylabs proxies before, but I’m not sure whether you can implement proxy switching in any scraper, or whether, once again, the necessity depends on scale.
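On the proxy switching question: with Python's standard library, rotating proxies per request can be sketched with urllib's ProxyHandler. The proxy addresses in the usage are placeholders, not real endpoints, and nothing here is specific to any provider's API. Whether you need rotation at all probably depends on the scale of the scraping and on the target site.

```python
import itertools
import urllib.request


def rotating_openers(proxies):
    """Yield (proxy, opener) pairs that cycle through the proxy list, one per request."""
    for proxy in itertools.cycle(proxies):
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        yield proxy, urllib.request.build_opener(handler)


def fetch_with_rotation(urls, proxies, timeout=30):
    """Fetch each URL through the next proxy in the list and return {url: bytes}."""
    openers = rotating_openers(proxies)
    pages = {}
    for url in urls:
        _proxy, opener = next(openers)
        with opener.open(url, timeout=timeout) as response:
            pages[url] = response.read()
    return pages
```

For example, `fetch_with_rotation(urls, ["http://proxy-a.example:8080", "http://proxy-b.example:8080"])` would alternate between the two placeholder proxies.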