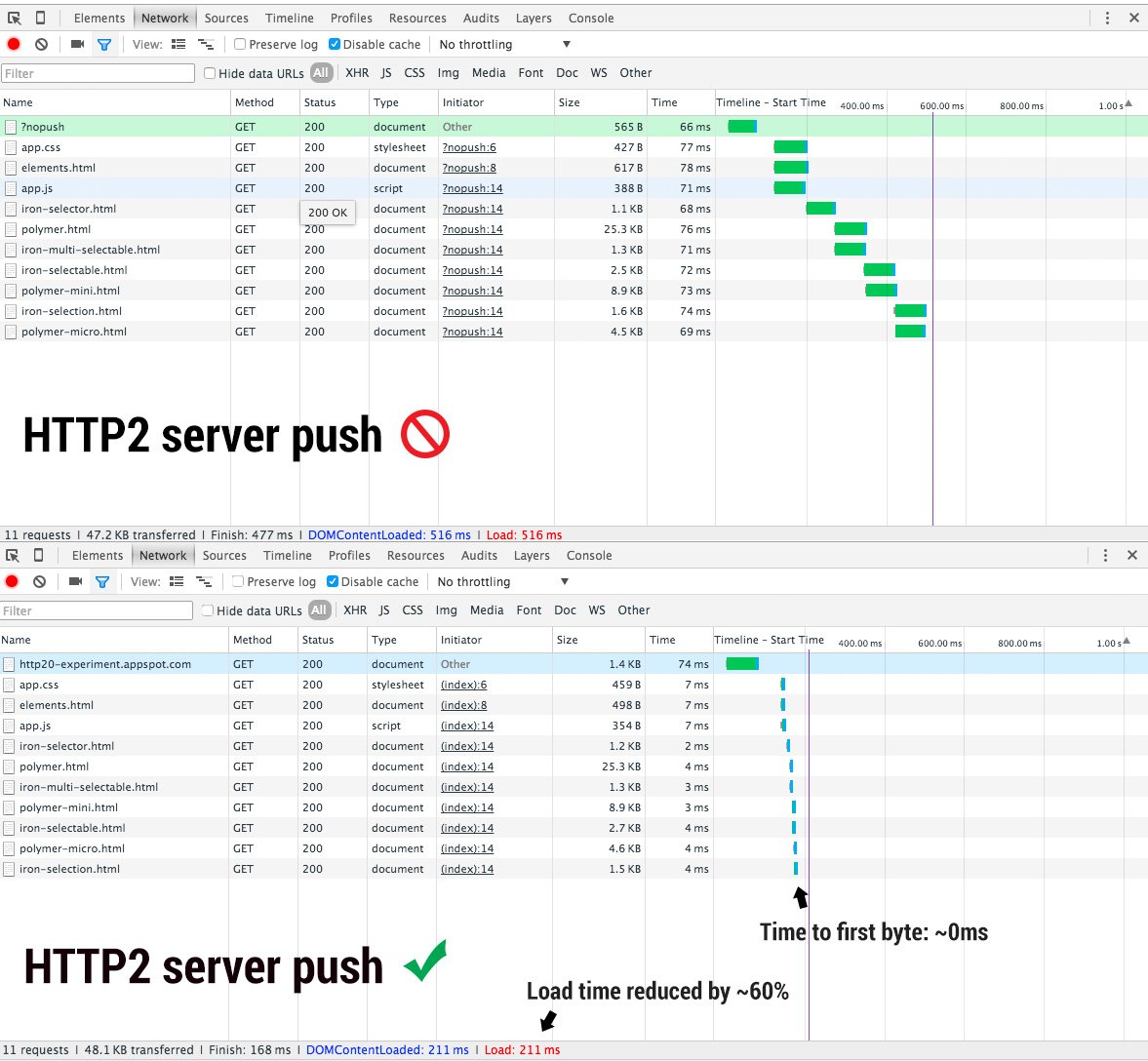

When a browser requests a page, the server sends the HTML as the first response. The browser then parses the HTML, discovers the embedded assets such as JavaScript and CSS files, and has to go back to the server to request them. Server Push lets the server avoid this extra round trip by pushing those files together with the HTML, so no valuable time is lost. Many hosting providers, such as Cloudflare, already support HTTP/2 Server Push (see Cloudflare's post "Announcing Support for HTTP/2 Server Push").

Sounds great, right? There is only one small problem: caching doesn't work properly, because Server Push is not aware of previous visits and does not check which resources the browser has already cached. The server pushes the files on every request, telling the browser that it should receive them from the server, whatever happened before.