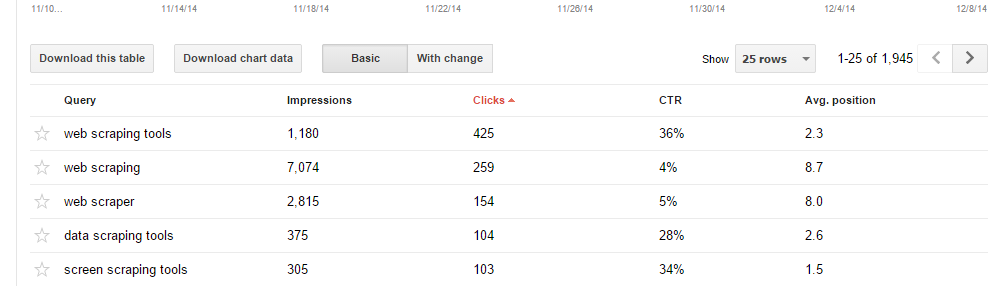

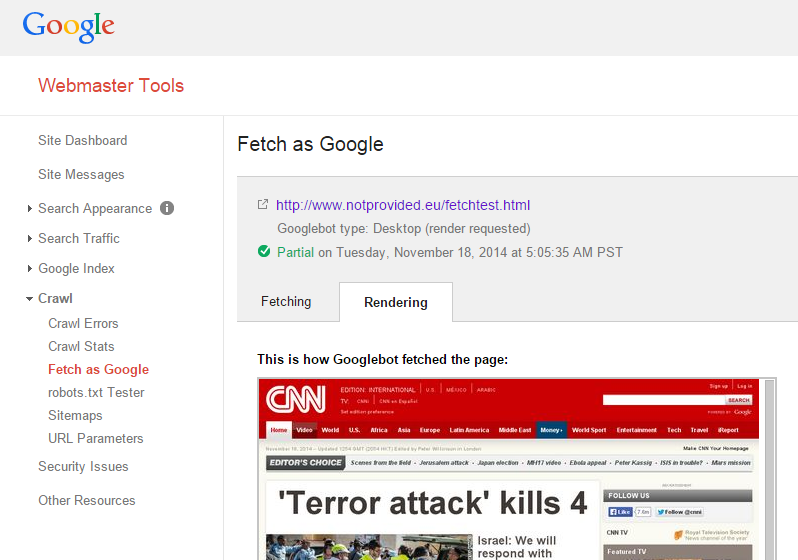

The biggest problem (after the problem with their data quality) I am having with Google Webmaster Tools is that you can’t export all the data for external analysis. Luckily the guys from the FMiner.com web scraping tool contacted me a few weeks ago to test their tool. The problem with Webmaster Tools is that you can’t use web based scrapers and all the other screen scraping software tools were not that good in the steps you need to take to get to the data within Webmaster Tools. The software is available for Windows and Mac OSX users.

FMiner is a classical screen scraping app, installed on your desktop. Since you need to emulate real browser behaviour, you need to install it on your desktop. There is no coding required and their interface is visual based which makes it possible to start scraping within minutes. Another possibility I like is to upload a set of keywords, to scrape internal search engine result pages for example, something that is missing in a lot of other tools. If you need to scrape a lot of accounts, this tool provides multi-browser crawling which decreases the time needed.

This tool can be used for a lot of scraping jobs, including Google SERPs, Facebook Graph search, downloading files & images and collecting e-mail addresses. And for the real heavy scrapers, they also have built in a captcha solving API system so if you want to pass captchas while scraping, no problem.

Below you can find an introduction to the tool, with one of their tutorial video’s about scraping IMDB.com:

Continue reading