I’ve seen a lot of articles about keyword research lately. Most of them cover the changes Google has made over the past few years, which data sources you should use, and how to define the set of keywords and keyphrases you are going to target. One of the articles I like is Nick Eubanks’s “How To Build a Keyword Matrix”, in which he describes how to use a two-dimensional table to determine the opportunities within a specific set of keywords. I still haven’t seen any clear evidence that people use more words per search query than they did 12 or 24 months ago, but I think this will become the case, given the way search engines are now able to interact with their users.

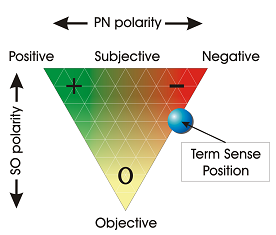

You can imagine that the more words a query contains, the easier it is to determine intent, purpose or sentiment, and from that you can predict user behaviour. This is what Google started doing with Hummingbird: making sure they understand the user and their actions. To determine possible traffic sources in terms of which keywords to target, it is important to get some insight into user behaviour based on keywords.

Let’s say you have an enormous list of 100,000 keywords you want to determine intent for. This can be done manually, or by tagging the keywords with a macro based on the presence of specific words. Keyphrases containing the word “buy” are mostly transactional, since the intent is to buy a product. Queries containing the word “details” are informational: users are still looking for information about specific products. This is a really basic way of classifying your keywords, so I started looking for ways of doing this automatically and at scale.
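The word-based tagging macro described above can be sketched in a few lines. This is a minimal illustration, not a specific tool: only “buy” and “details” come from the examples above; the other trigger words and the `unknown` fallback are hypothetical choices you would replace with your own lists.

```python
import re

# Hypothetical trigger words per intent. Only "buy" and "details" come from
# the text; extend these sets with your own vocabulary. Dict order sets the
# priority when a keyword matches more than one intent.
INTENT_TRIGGERS = {
    "transactional": {"buy", "order", "cheap"},
    "informational": {"details", "review", "how"},
}


def tag_intent(keyword: str) -> str:
    """Return the first intent whose trigger words appear in the keyword."""
    words = set(re.findall(r"[a-z0-9]+", keyword.lower()))
    for intent, triggers in INTENT_TRIGGERS.items():
        if words & triggers:
            return intent
    return "unknown"  # no trigger word found: leave for manual review


for kw in ["buy iphone 5s", "iphone 5s details", "iphone 5s"]:
    print(kw, "->", tag_intent(kw))
```

Matching on whole words keeps “cheapest” from counting as “cheap”, which is exactly the kind of limitation that pushes you toward the automated approaches below.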

Automatic keyword processing

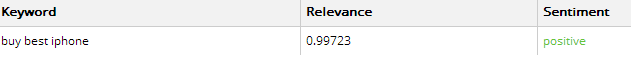

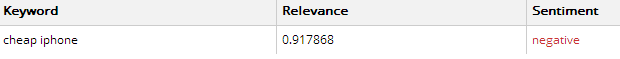

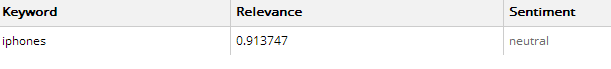

A commercial solution can be found at http://www.alchemyapi.com/products/demo/. They offer a free API for testing once you have signed up. Try their demo to get an idea of how such tools can help you determine relevance and intent based only on a combination of words:

[buy best iphone]

[cheap iphone]

[Compare iPhones]

Another option is building your own tool if you can program 🙂 Google has done a lot of research on machine learning. It isn’t limited to language processing, of course, but that was one of their main focus areas in the early days. They actually built a publicly available solution called the Google Prediction API, which anyone can use to build their own machine learning systems for spam detection, language processing and sentiment analysis, to name just a few possibilities. The basics of machine learning are easy to understand: “Machine learning focuses on prediction, based on known properties learned from the training data.” You feed training data into your system, test it on specific datasets and adjust it when it doesn’t work properly, so defining the properties you want the system to use is a continuous process.
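The Prediction API does not expose which learning algorithm it uses, so the sketch below does not reproduce it. Instead it swaps in a textbook multinomial naive Bayes classifier, using only the Python standard library, to show the “learn properties from training data, then predict” loop on labelled keywords. The training examples and labels are hypothetical.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Split a keyword into lowercase words."""
    return text.lower().split()


class NaiveBayesIntent:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, examples):
        # examples: iterable of (keyword, label) pairs
        self.label_counts = Counter()
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for text, label in examples:
            self.label_counts[label] += 1
            for word in tokenize(text):
                self.word_counts[label][word] += 1
                self.vocab.add(word)
        self.total = sum(self.label_counts.values())
        return self

    def predict(self, text):
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            # Log prior plus the sum of smoothed log likelihoods per word.
            score = math.log(count / self.total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for word in tokenize(text):
                score += math.log((self.word_counts[label][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training data.
training = [
    ("buy iphone", "transactional"),
    ("buy cheap iphone", "transactional"),
    ("order iphone online", "transactional"),
    ("iphone details", "informational"),
    ("iphone specs details", "informational"),
    ("how does iphone work", "informational"),
]
model = NaiveBayesIntent().fit(training)
print(model.predict("buy iphone case"))
print(model.predict("iphone details please"))
```

When predictions come out wrong, you add or relabel training examples and fit again, which is the continuous adjustment process described above.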

First, you need to create a Google Developers Console project with both the Google Prediction API and the Google Cloud Storage API activated. Once you have activated those, start by picking one of the six available client libraries, or use Google’s API explorer for Prediction API v1.6. Once everything is installed, execute the following steps:

- Create a CSV file of your training data

- Create a new project in the Prediction API and make sure you fill in your billing information (100 requests/day are free)

- Upload your CSV to Google Cloud Storage

- Go to the Prediction API explorer and train a new model on the uploaded training set

- Use the trainedmodels.predict method to make predictions 🙂
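As a rough sketch of the data behind these steps: the Prediction API expects a training CSV without a header row, with the label in the first column and the feature (here the keyword text) after it. The request body fields below (`id` and `storageDataLocation` for trainedmodels.insert, `input.csvInstance` for trainedmodels.predict) are, to the best of my knowledge, the v1.6 names; check them against the reference before use. The keywords, labels, bucket and model names are hypothetical placeholders.

```python
import csv
import io
import json

# Hypothetical labelled keywords as (label, keyword) pairs.
TRAINING_ROWS = [
    ("Positive transactional", "buy best iphone"),
    ("Positive transactional", "cheap iphone"),
    ("Neutral informational", "compare iphones"),
]


def training_csv(rows):
    """Label in the first column, keyword text in the second, no header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, quoting=csv.QUOTE_ALL, lineterminator="\n")
    for label, keyword in rows:
        writer.writerow([label, keyword])
    return buf.getvalue()


def insert_body(model_id, data_location):
    # Body for trainedmodels.insert; data_location is "bucket/object.csv".
    return {"id": model_id, "storageDataLocation": data_location}


def predict_body(keyword):
    # Body for trainedmodels.predict: a single CSV instance to classify.
    return {"input": {"csvInstance": [keyword]}}


print(training_csv(TRAINING_ROWS))
print(json.dumps(insert_body("keyword-intent", "my-bucket/keywords.csv")))
print(json.dumps(predict_body("find best iphones")))
```

Quoting every field keeps keywords that contain commas from splitting into extra feature columns.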

Or just follow the “Hello World!” tutorial on their website and use labels such as “Positive transactional”, “Negative transactional” and “Neutral informational” for your training data. Make sure you understand the difference between queries like [compare cheapest iphones] and [find best iphones], especially since such queries tell you something about how willing the user is to spend money, and how much. Based on these kinds of assumptions you can gain more insight into which keywords are really valuable for your website.

The biggest challenge is building a decent training dataset. For English, you can also start with SentiWordNet, an English lexical resource for opinion mining, to test prediction modelling with Google’s API. You will get the best results by investing some time in building a decent training set, which you can reuse for every subsequent project.
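Because a good training set is reusable, it can help to keep one master set and fold each new project’s labelled keywords into it. Below is a minimal sketch, assuming each project’s data is a list of (keyword, label) pairs. Keywords are normalised so near-duplicates line up, and keywords with conflicting labels are set aside for manual review instead of being silently overwritten. The project data is hypothetical.

```python
from collections import defaultdict


def normalize(keyword):
    """Lowercase and collapse whitespace so duplicate keywords line up."""
    return " ".join(keyword.lower().split())


def merge_training_sets(*datasets):
    """Merge (keyword, label) lists; return (merged, conflicts)."""
    labels = defaultdict(set)
    for dataset in datasets:
        for keyword, label in dataset:
            labels[normalize(keyword)].add(label)
    # Keep keywords with exactly one label; report the rest for review.
    merged = {kw: next(iter(ls)) for kw, ls in labels.items() if len(ls) == 1}
    conflicts = {kw: sorted(ls) for kw, ls in labels.items() if len(ls) > 1}
    return merged, conflicts


# Hypothetical labelled data from two projects.
project_a = [("Buy iPhone", "transactional"), ("iphone details", "informational")]
project_b = [
    ("buy  iphone", "transactional"),
    ("compare iphones", "informational"),
    ("iphone details", "transactional"),
]
merged, conflicts = merge_training_sets(project_a, project_b)
print(merged)
print(conflicts)
```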

What are your tips & tricks on the subject of automating keyword research?

The Prediction API is already on the agenda for 2014 :).

I’ll keep you posted.

What is the relevance of NLP here, in your view?

The way I see it, NLP is about influencing the subconscious.

Automating keyword research, yes; NLP, I’m not sure.

With the Prediction API you will get sentiment based on your own keywords. How are you influencing user behavior in this case?

Basically, I’m looking at the possibilities: is there enough volume and revenue potential to build separate landing pages designed for specific keyword combinations? Cheap doesn’t mean best for everyone, but in the end you can always try to build pages that match the search query as closely as possible, from both an SEO and a user perspective.