Last week at SMX Munich, Malte Landwehr and I presented a session about SEO-related patents. Why should you look at patents at all? Because they help you:

- Learn from the past

- Predict future changes

- Get an idea of the inner workings of search engines

- Get an idea of what it takes for certain features to exist

These are the slides by Malte, who did the first part:

And these are my slides:

In the first part, Malte discussed several patents related to changes Google has made over the past years. One of the biggest was the update of its algorithm known as Hummingbird. With this update, Google completely changed the way it interprets the web and its users’ behaviour. Both its understanding of web pages and of the queries people use to find information were upgraded to the point where Google can understand users’ intentions and expectations. With this improved algorithm, Google came a bit closer to its mission statement:

“Google’s mission is to organize the world’s information and make it universally accessible and useful.”

The Hummingbird update is basically a move towards semantic search and revolves around the following five topics:

- Entities

- Relationships

- (Co-)Citations

- Trust Scores

- Personalization

One of the patents related to entities and relationships was filed in July 2004 by Anna Patterson: Phrase-based indexing in an information retrieval system. This patent describes how systems can move from keyword-based indexing and ranking to phrase-based algorithms. This made it easier to present relevant SERPs based on phrases instead of individual keyword scores. As a result, repeating your keywords as many times as possible became less useful. The patent also shows the first signs of predicting user behaviour based on phrase information databases:

“In addition, in some instances a user may enter an incomplete phrase in a search query, such as “President of the”. Incomplete phrases such as these may be identified and replaced by a phrase extension, such as “President of the United States.” This helps ensure that the user’s most likely search is in fact executed.”
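To make phrase extension a bit more concrete, here is a minimal Python sketch. It assumes a hypothetical phrase table with made-up frequencies and simply extends an incomplete query to the most frequent known phrase that starts with it. The patent’s actual approach, based on phrase statistics collected during indexing, is considerably more involved.

```python
# Hypothetical phrase table: phrase -> how often it was seen in indexed documents.
# Phrases and counts are invented for illustration only.
PHRASE_COUNTS = {
    "president of the united states": 950,
    "president of the european commission": 120,
    "president of the board": 80,
}


def extend_phrase(query: str, phrase_counts: dict[str, int]) -> str:
    """Return the most frequent known phrase that extends `query`.

    If no longer phrase starts with the query, the query is returned unchanged.
    """
    prefix = query.strip().lower()
    # Require a word boundary after the prefix, so "president of th" won't match.
    candidates = [p for p in phrase_counts if p.startswith(prefix + " ")]
    if not candidates:
        return query
    # Pick the extension seen most often in the collection.
    return max(candidates, key=lambda p: phrase_counts[p])


print(extend_phrase("President of the", PHRASE_COUNTS))
# → president of the united states
```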

There has been some discussion in the industry about Hummingbird-related patents. One patent that is mentioned a lot is Synonym identification based on co-occurring terms, because it describes a system that can revise queries and thereby improve the algorithm’s behaviour and its ability to present more relevant search engine result pages. It’s easy to match this to Hummingbird’s behaviour, since Google explained that one of the key elements of the update is:

“In particular, Google said that Hummingbird is paying more attention to each word in a query, ensuring that the whole query — the whole sentence or conversation or meaning — is taken into account, rather than particular words. The goal is that pages matching the meaning do better, rather than pages matching just a few words.” Source: Search Engine Land

Here is an example of a query where Google doesn’t just match specific keywords to page content, but first looks at the meaning of the query and then replaces a term with a relevant synonym: https://www.google.com/#q=car+repair
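The core idea behind co-occurrence-based synonym detection can be sketched in a few lines: two terms that appear next to the same surrounding words in many queries are candidate synonyms. The Python sketch below assumes a tiny hypothetical query log and uses the Jaccard overlap of context words as the similarity measure. That measure and the 0.5 threshold are my own simplifications, not taken from the patent.

```python
from collections import defaultdict


def context_sets(queries: list[str]) -> dict[str, set[str]]:
    """Map each term to the set of other terms it co-occurs with in a query."""
    contexts: dict[str, set[str]] = defaultdict(set)
    for query in queries:
        terms = query.lower().split()
        for term in terms:
            contexts[term].update(t for t in terms if t != term)
    return contexts


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two context sets: 0.0 means disjoint, 1.0 means identical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def synonym_candidates(term: str, queries: list[str], threshold: float = 0.5) -> list[str]:
    """Terms whose query contexts overlap with `term`'s by at least `threshold`."""
    contexts = context_sets(queries)
    target = contexts.get(term, set())
    scores = {
        other: jaccard(target, ctx)
        for other, ctx in contexts.items()
        if other != term
    }
    matches = [other for other, score in scores.items() if score >= threshold]
    # Highest overlap first; ties broken alphabetically for stable output.
    return sorted(matches, key=lambda other: (-scores[other], other))


# Hypothetical query log, invented for illustration.
QUERIES = [
    "car repair", "auto repair", "car insurance",
    "auto insurance", "car repair shop", "auto repair shop",
]
print(synonym_candidates("car", QUERIES))  # → ['auto']
```

Once "auto" is identified as a likely synonym of "car", a query for "car repair" can also be matched against pages that only mention "auto repair".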

Combined with the Knowledge Graph in the SERPs, this lets Google give answers directly to the user. You no longer need to type your complete question, because Google understands the intention behind the query: https://www.google.com/#q=cure+hiccups

As mentioned before, patents aren’t necessarily integrated into Google’s algorithms: patenting a concept or idea doesn’t mean it can be implemented in practice at the current time. That is exactly why it is worth monitoring patents, to keep up with possible future additions to and developments in web search.

Future of web search

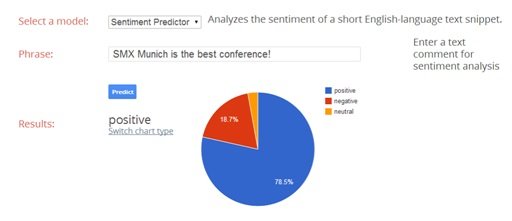

Given the current state of the semantic web, sentiment analysis is a key element of future web search. Identifying subjective information will be important for personalising search and for extracting social media content in a form that can be used to rank web pages. Once you can process social media signals based on sentiment, the value of specific social interactions becomes easier to calculate. A relevant patent here is Large-scale sentiment analysis (US 7996210 B2), which describes a system and method for large-scale sentiment analysis. In their research, the inventors were able to analyse, for example, the differences in sentiment between actors and international criminal figures.
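To illustrate what entity-level sentiment means, here is a deliberately simple Python sketch: it averages the scores of a tiny hypothetical sentiment lexicon over every sentence that mentions an entity. The lexicon, the naive sentence splitting and the invented entities "Alice" and "Mallory" are all assumptions for illustration; this is not the method described in the patent.

```python
import re

# Hypothetical mini sentiment lexicon (word -> polarity); real systems use far
# larger lexicons, often expanded automatically.
LEXICON = {
    "brilliant": 1.0, "loved": 1.0, "great": 1.0,
    "awful": -1.0, "guilty": -1.0, "fraud": -1.0,
}


def entity_sentiment(entity: str, documents: list[str]) -> float:
    """Average lexicon score over all sentences that mention `entity`.

    Returns 0.0 if the entity is never mentioned.
    """
    mention = re.compile(rf"\b{re.escape(entity.lower())}\b")
    scores = []
    for doc in documents:
        # Naive sentence split on ., ! and ?
        for sentence in re.split(r"[.!?]", doc.lower()):
            if not mention.search(sentence):
                continue
            words = re.findall(r"[a-z']+", sentence)
            scores.append(sum(LEXICON.get(word, 0.0) for word in words))
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical document set with two invented entities.
DOCS = [
    "Alice was brilliant. Critics loved Alice in the film.",
    "The court found Mallory guilty of fraud.",
]
print(entity_sentiment("Alice", DOCS))    # → 1.0
print(entity_sentiment("Mallory", DOCS))  # → -2.0
```

Note that the result depends entirely on which documents you feed in, which leads directly to the problem below.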

The problem with sentiment analysis is that the result depends on the set of documents you analyse. If you only use newspapers, you will get a particular result, since journalists can’t write anything they want, especially in countries where the government has control over news organisations. If you also include blogs in your document set, chances are your overall sentiment will be a lot lower. One way to handle this is to build a domain-specific sentiment classifier (US 7987188 B2) instead of looking at complete sets of data.

Google did a lot of research on this topic and shared some of it through the Google Prediction API, which lets you create your own basic sentiment analysis model (see “Creating a Sentiment Analysis Model”). You start by uploading training data; once you have uploaded enough, you can build a model that the Prediction API uses to make predictions. The bigger your training data set, the better the predictions, so make sure you create or buy enough training data.
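The Prediction API is a hosted service, so I won’t reproduce its calls here, but the underlying workflow (train on labelled examples, then predict) can be sketched locally. The Python sketch below trains a tiny multinomial Naive Bayes classifier, a technique I chose for illustration and not necessarily what Google uses, on hypothetical labelled examples. Training one model per domain is a simple way to get the kind of domain-specific classifier mentioned above: the word "unpredictable" is praise for a movie plot but a complaint about a phone battery.

```python
import math
from collections import Counter


class NaiveBayesSentiment:
    """Minimal multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def train(self, examples: list[tuple[str, str]]) -> None:
        """`examples` is a list of (label, text) pairs."""
        for label, text in examples:
            words = text.lower().split()
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(words)
            self.vocab.update(words)

    def predict(self, text: str) -> str:
        if not self.doc_counts:
            raise ValueError("train the model first")
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus the sum of smoothed log likelihoods per word.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for word in text.lower().split():
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training data for two separate domains.
movies = NaiveBayesSentiment()
movies.train([("positive", "unpredictable plot great acting"),
              ("negative", "predictable plot bad acting")])
phones = NaiveBayesSentiment()
phones.train([("positive", "reliable battery great screen"),
              ("negative", "unpredictable battery bad screen")])

print(movies.predict("unpredictable"))  # → positive
print(phones.predict("unpredictable"))  # → negative
```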

Local search possibilities

So far we have been discussing Google patents, but Microsoft is of course doing its own research too. An interesting patent related to local search is Visualization of top local geographical entities through web search data, which describes a system that analyzes search data to determine the popularity of geographic entities. This can be used to rank local businesses and to create maps tailored to specific demands, based on the time and location the user is searching from. Based on direction-related terms (such as “to” and “from”), the search engine can offer adequate and relevant maps.
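The patent is only summarised here, so the following Python sketch is a toy illustration rather than its actual algorithm. It counts how often known local entities appear in a hypothetical query log, optionally restricted to certain hours of the day, and uses the direction words "from" and "to" to pull an origin and destination out of a single query. The entity list and log are invented.

```python
from collections import Counter
from typing import Optional

# Hypothetical local entities and a toy log of (hour_of_day, query) pairs.
ENTITIES = {"central station", "city museum", "harbour cafe"}
QUERY_LOG = [
    (8, "directions to central station"),
    (8, "bus from city museum to central station"),
    (13, "harbour cafe opening hours"),
    (13, "walk to harbour cafe"),
    (20, "harbour cafe menu"),
]


def top_entities(log: list[tuple[int, str]], entities: set[str],
                 hours: Optional[set[int]] = None, n: int = 3) -> list[tuple[str, int]]:
    """Rank entities by how many queries mention them, optionally for given hours only."""
    counts: Counter = Counter()
    for hour, query in log:
        if hours is not None and hour not in hours:
            continue
        for entity in entities:
            if entity in query.lower():
                counts[entity] += 1
    return counts.most_common(n)


def route_from_query(query: str, entities: set[str]) -> tuple[Optional[str], Optional[str]]:
    """Extract (origin, destination) using the direction words 'from' and 'to'."""
    text = query.lower()
    origin = destination = None
    for entity in entities:
        if f"from {entity}" in text:
            origin = entity
        if f"to {entity}" in text:
            destination = entity
    return origin, destination


print(top_entities(QUERY_LOG, ENTITIES))
# → [('harbour cafe', 3), ('central station', 2), ('city museum', 1)]
print(route_from_query("bus from city museum to central station", ENTITIES))
# → ('city museum', 'central station')
```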

Another interesting Google patent, filed in 2011 but only published in January 2014, is Transportation-aware physical advertising conversions. The patent discusses a system that performs real-time calculations to offer transportation based on the consumer’s most likely route and mode of transportation (train, taxi, rental car etc.). Companies can set up advertisements using an AdWords-like bidding system, and could also offer a free taxi ride when people search for specific company names. This is an interesting opportunity for brick-and-mortar shops to attract potential customers by offering a useful service.
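How such an offer might be selected can be sketched roughly as follows. Everything here is a hypothetical simplification of my own, not the patent’s method: each shop states the maximum it is willing to pay to bring in one customer, the taxi fare is estimated with a made-up base fee plus per-kilometre rate, and the system offers a free ride from the shop that can cover the fare with the largest margin. Route and transportation-mode estimation are left out entirely.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Advertiser:
    name: str
    max_bid: float      # most the shop will pay to bring in one customer (hypothetical)
    distance_km: float  # distance from the user to the shop


def estimated_fare(distance_km: float, base: float = 3.0, per_km: float = 1.5) -> float:
    """Hypothetical taxi fare model: flat base fee plus a per-kilometre rate."""
    return base + per_km * distance_km


def choose_ride_offer(advertisers: list[Advertiser]) -> Optional[Advertiser]:
    """Return the advertiser that can cover the fare with the largest margin, or None."""
    affordable = [ad for ad in advertisers if estimated_fare(ad.distance_km) <= ad.max_bid]
    if not affordable:
        return None
    return max(affordable, key=lambda ad: ad.max_bid - estimated_fare(ad.distance_km))


SHOPS = [
    Advertiser("Shoe store", max_bid=12.0, distance_km=4.0),  # fare 9.0, margin 3.0
    Advertiser("Bike shop", max_bid=20.0, distance_km=10.0),  # fare 18.0, margin 2.0
    Advertiser("Bakery", max_bid=5.0, distance_km=2.0),       # fare 6.0, too expensive
]
offer = choose_ride_offer(SHOPS)
print(offer.name if offer else "no offer")  # → Shoe store
```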

How about Google Now?

The personal assistant Google Now was launched on July 9, 2012, with the release of Android 4.1. The system recognizes repeated actions performed on the user’s device and presents more relevant information in the form of so-called “cards”. These specialized cards contain all kinds of information, ranging from time reminders to stock information and nearby events. The whole idea behind Google Now is that Google is able to predict user behaviour based on concepts instead of keywords.
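Google hasn’t published how Google Now detects these patterns, so the following Python sketch only illustrates the general idea of recognizing repeated actions. It assumes a hypothetical log of (timestamp, action) events and treats an action as a routine when it happens in the same hour of the day on at least three different days; such a routine could then trigger a card.

```python
from collections import defaultdict
from datetime import datetime


def repeated_actions(events: list[tuple[datetime, str]], min_days: int = 3) -> list[tuple[str, int]]:
    """Find (action, hour) pairs that occur on at least `min_days` different days."""
    days_seen: dict[tuple[str, int], set] = defaultdict(set)
    for timestamp, action in events:
        days_seen[(action, timestamp.hour)].add(timestamp.date())
    return sorted(key for key, days in days_seen.items() if len(days) >= min_days)


# Hypothetical device log.
EVENTS = [
    (datetime(2014, 3, 10, 8, 5), "check traffic"),
    (datetime(2014, 3, 11, 8, 10), "check traffic"),
    (datetime(2014, 3, 12, 8, 2), "check traffic"),
    (datetime(2014, 3, 12, 12, 30), "check stocks"),
]
print(repeated_actions(EVENTS))  # → [('check traffic', 8)]
```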

Google’s aim is to present information without the user having to search for it. In December 2012, Google’s patent application WO 2012173894 A2 was published, describing a system that can automatically react to user behaviour in a personalized way. In an interview, Ray Kurzweil states that he is convinced that within 15 years, Google will have created a system capable of understanding natural language and human emotion. You can read the interview in “Google’s Ray Kurzweil predicts how the world will change”.

Doing something useful

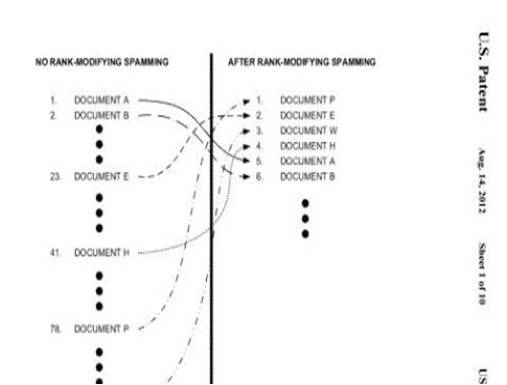

To end this discussion, I would like to point out one funny patent by Google: Ranking Documents. It describes a few ways in which the search engine might respond when it suspects that a website does not comply with Google’s search quality guidelines. The filtering is applied in a way the person responsible for the spam does not expect: after specific changes are made to the website, its rankings may first decrease before they start to increase. It’s simply a way for Google to play with spammers.
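The patent describes this response only in general terms, so the following Python sketch is purely illustrative. It assumes a hypothetical ranking score (higher is better) that, after a suspicious change, first dips below its old value for a few time steps and only then moves towards its new target. The dip size, the number of steps and the linear shape are my own assumptions.

```python
def transition_scores(old_score: float, target_score: float, dip: float = 0.2,
                      dip_steps: int = 3, total_steps: int = 10) -> list[float]:
    """Hypothetical score path after a suspicious change to a website.

    For the first `dip_steps` steps the score falls linearly to
    old_score * (1 - dip), the opposite of what the site owner expects;
    afterwards it rises linearly to `target_score`.
    """
    if total_steps <= dip_steps:
        raise ValueError("total_steps must be larger than dip_steps")
    low = old_score * (1 - dip)
    scores = []
    for step in range(1, total_steps + 1):
        if step <= dip_steps:
            scores.append(old_score + (low - old_score) * step / dip_steps)
        else:
            progress = (step - dip_steps) / (total_steps - dip_steps)
            scores.append(low + (target_score - low) * progress)
    return scores


print([round(s, 1) for s in transition_scores(50.0, 80.0)])
# → [46.7, 43.3, 40.0, 45.7, 51.4, 57.1, 62.9, 68.6, 74.3, 80.0]
```

A spammer watching this curve sees the rankings drop right after the change and may draw the wrong conclusion about what works.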